Many businesses struggle with slow pipeline execution, rising cloud infrastructure costs, and difficulty scaling to meet growing demand. In this case, the customer initially reached out about a critical deployment issue, which became the starting point for a broader collaboration focused on optimizing their infrastructure.

The Customer

The customer was a mid-sized fintech company operating in the cryptocurrency space. They offered financial services through their own trading platform, with a primary focus on the trading of digital assets directly within their ecosystem.

Their Infrastructure

The customer operated separate AWS accounts for staging and production environments. Each environment served a distinct purpose. The staging account supported pre-deployment testing, integration validation, and performance benchmarking. Meanwhile, the production account hosted the live application stack, which served end users and handled real-time workloads.

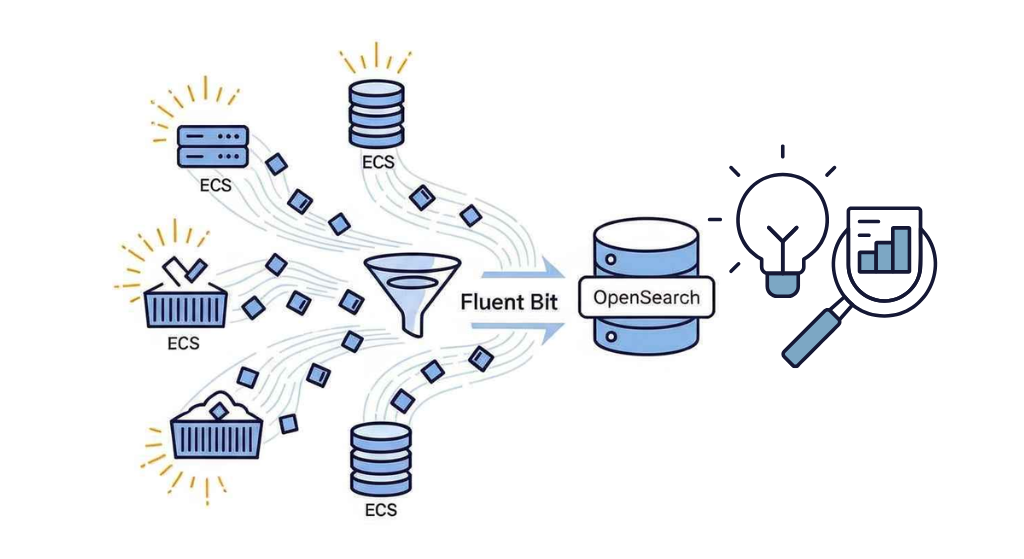

The customer ran their infrastructure on AWS using ECS Fargate for container orchestration. For data storage, they relied on a combination of services including Amazon RDS, Amazon DocumentDB (MongoDB-compatible), and Amazon ElastiCache for Redis.

The application consisted of multiple microservices, each deployed as an independent ECS service. The infrastructure provisioning and deployment pipelines were orchestrated using AWS Copilot.

To support continuous integration and continuous deployment, the customer implemented an AWS-native DevOps pipeline utilizing AWS CodePipeline, AWS CodeBuild, and AWS CodeDeploy.

The Challenge

The primary challenge was a critical CI/CD pipeline failure that blocked deployments to the production environment. Restoring deployment capabilities was the top priority to ensure the development team could continue releasing updates.

Diagnosing the pipeline issue was complicated by AWS Copilot, which generated large CloudFormation stacks that were difficult to analyze. After a thorough investigation, we found that the Fargate task did not have enough CPU to bootstrap the application. Once we updated the task definitions to increase the CPU allocation, the immediate issue was resolved.
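Fargate only accepts specific CPU and memory pairings at the task level, so raising the CPU allocation can also force a change in memory. The following is a minimal sketch of that check in Python. The helper names (`valid_memory_values`, `bump_cpu`) and the example sizes are hypothetical and are not taken from the customer's task definitions.

```python
# CPU units -> (min MiB, max MiB, step MiB) for Fargate task-level sizing.
FARGATE_MEMORY_RANGES = {
    512: (1024, 4096, 1024),
    1024: (2048, 8192, 1024),
    2048: (4096, 16384, 1024),
    4096: (8192, 30720, 1024),
    8192: (16384, 61440, 4096),
    16384: (32768, 122880, 8192),
}
# The smallest tier allows only three discrete memory values.
FARGATE_SMALLEST_TIER = {256: (512, 1024, 2048)}


def valid_memory_values(cpu: int) -> list[int]:
    """Return every memory size (MiB) that Fargate accepts for a CPU tier."""
    if cpu in FARGATE_SMALLEST_TIER:
        return list(FARGATE_SMALLEST_TIER[cpu])
    if cpu not in FARGATE_MEMORY_RANGES:
        raise ValueError(f"{cpu} is not a valid Fargate CPU value")
    low, high, step = FARGATE_MEMORY_RANGES[cpu]
    return list(range(low, high + 1, step))


def bump_cpu(cpu: int, memory: int) -> tuple[int, int]:
    """Move to the next CPU tier, keeping memory at or above its current value."""
    tiers = sorted([*FARGATE_SMALLEST_TIER, *FARGATE_MEMORY_RANGES])
    if cpu not in tiers:
        raise ValueError(f"{cpu} is not a valid Fargate CPU value")
    position = tiers.index(cpu)
    if position == len(tiers) - 1:
        raise ValueError("already at the largest Fargate CPU tier")
    next_cpu = tiers[position + 1]
    candidates = [m for m in valid_memory_values(next_cpu) if m >= memory]
    if not candidates:
        raise ValueError("no valid memory size at or above the current value")
    return next_cpu, candidates[0]


if __name__ == "__main__":
    # Hypothetical starting size: 0.25 vCPU with 512 MiB.
    print(bump_cpu(256, 512))
```

In this hypothetical case, moving from 256 to 512 CPU units also raises the minimum memory to 1024 MiB, which is the kind of coupled change that is easy to miss inside a large generated stack.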

After we resolved the deployment issue, the customer pointed out several ongoing concerns. Below are a few specific areas the customer asked us to review and, if possible, improve.

- Growing infrastructure costs

- Slow pipeline execution (usually 1.5 hours for one deployment)

- A desire to review and improve the overall cloud architecture to increase efficiency and reliability

To address these, we initiated a full review of their cloud infrastructure. During this review, we focused on finding opportunities to improve performance, reduce costs, and enhance the overall architecture.

Analysis of Pipelines and Cloud Infrastructure

To perform a complete evaluation, we reviewed all major components of the customer's AWS setup to identify performance bottlenecks, inefficiencies, and opportunities for cost savings. One of the project requirements was to migrate from AWS Fargate to ECS on EC2, which required rethinking deployment strategies and resource provisioning.

The scope of our analysis included:

- Networking and VPC configuration

- CI/CD pipelines

- Data stores (RDS, DocumentDB, ElastiCache)

- Migration plan from ECS Fargate to ECS EC2

- CloudFront configuration and caching strategy

- AWS Copilot

- Overall cost optimization opportunities

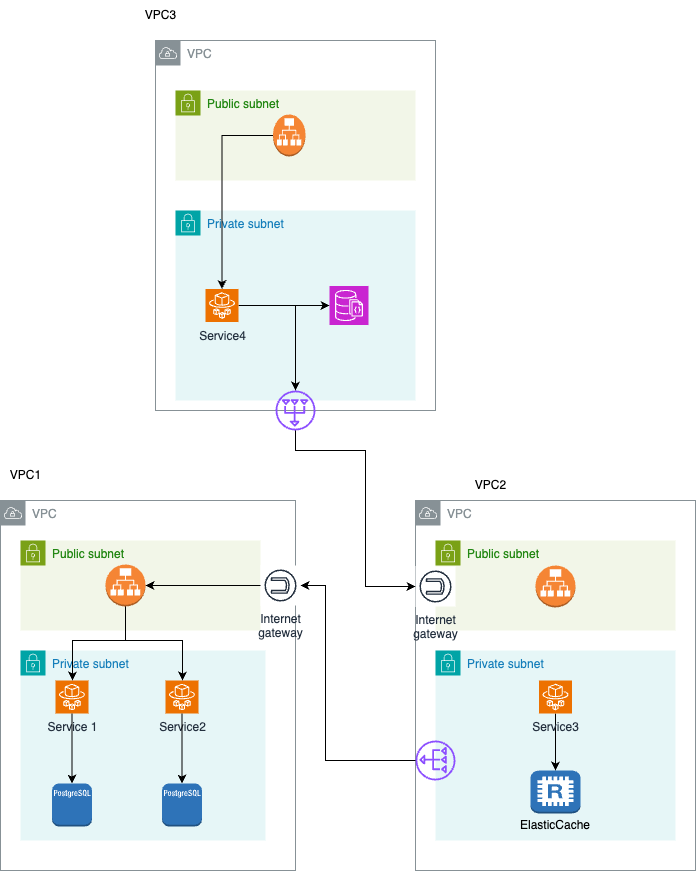

The Existing Architecture

We carefully analyzed the customer’s cloud infrastructure. To visualize the current setup, we created a detailed architecture diagram. This helped us map out all key components including networking, compute, and storage to understand their relationships. From there, we identified clear areas for improvement. As a result, we recommended changes to boost scalability, reduce complexity, and cut infrastructure costs.

According to the architecture, the customer was using multiple VPCs that communicated with each other. We could not identify a solid reason for creating a separate VPC for each service, and the customer did not have a clear justification for this approach either. Running multiple VPCs increases AWS costs because each one needs its own load balancers, NAT gateways, and other networking resources.
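To make the cost argument concrete, here is a rough back-of-the-envelope sketch in Python. Every number in it is an assumption for illustration: the hourly rates are placeholders rather than quoted AWS prices, the count of five VPCs is invented (the source only says "multiple"), and data processing and inter-VPC transfer charges are ignored.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # common approximation for monthly billing estimates


@dataclass(frozen=True)
class VpcFootprint:
    """Always-on networking components billed per hour inside one VPC."""
    nat_gateways: int
    load_balancers: int


def monthly_fixed_cost(
    vpcs: list[VpcFootprint], nat_hourly: float, lb_hourly: float
) -> float:
    """Sum the hourly charges for NAT gateways and load balancers over a month."""
    hourly = sum(
        v.nat_gateways * nat_hourly + v.load_balancers * lb_hourly for v in vpcs
    )
    return hourly * HOURS_PER_MONTH


if __name__ == "__main__":
    NAT_HOURLY = 0.05  # placeholder rate, not an AWS quote
    LB_HOURLY = 0.02   # placeholder rate, not an AWS quote

    # Hypothetical "before": five VPCs, each with one NAT gateway and one load balancer.
    per_service = [VpcFootprint(nat_gateways=1, load_balancers=1)] * 5
    # Hypothetical "after": one VPC with one NAT gateway, a public and an internal load balancer.
    consolidated = [VpcFootprint(nat_gateways=1, load_balancers=2)]

    print(round(monthly_fixed_cost(per_service, NAT_HOURLY, LB_HOURLY), 2))
    print(round(monthly_fixed_cost(consolidated, NAT_HOURLY, LB_HOURLY), 2))
```

The fixed hourly charges scale with the number of VPCs, so each VPC removed takes its own NAT gateway and load balancer charges with it.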

To reduce costs and simplify the architecture, we suggested creating a new VPC and consolidating all resources within it. Additionally, instead of using ECS Fargate to run services, we recommended switching to ECS on EC2, which provides more control and can be more cost-effective in certain scenarios, including this one.

As of March 21, 2025, AWS Copilot did not support provisioning ECS on EC2. Therefore, the new architecture was implemented using Terraform to ensure flexibility and infrastructure-as-code best practices.

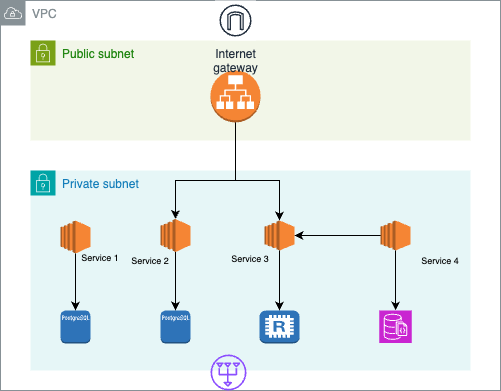

The Improved Solution

The newly proposed architecture involves using a single VPC to host all services, deployed using ECS on EC2 instances. A single load balancer, placed in a public subnet, will handle incoming traffic and route it to the appropriate services based on defined conditions.

Services deployed in private subnets can call each other directly through an internal load balancer.
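Routing on defined conditions can be pictured as a list of priority-ordered rules with a default target, which is how Application Load Balancer listener rules behave. The sketch below models only path conditions. The service names and paths are hypothetical, and `fnmatchcase` is a stand-in for the load balancer's wildcard matching (it also accepts `[...]` sets, which are not part of the ALB path-pattern syntax).

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Rule:
    """One listener rule: lower priority numbers are evaluated first."""
    priority: int
    path_pattern: str
    target: str


def route(path: str, rules: list[Rule], default_target: str) -> str:
    """Return the target of the first matching rule, or the default target."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if fnmatchcase(path, rule.path_pattern):
            return rule.target
    return default_target


if __name__ == "__main__":
    # Hypothetical rules for illustration only.
    rules = [
        Rule(priority=20, path_pattern="/api/*", target="gateway-service"),
        Rule(priority=10, path_pattern="/api/orders/*", target="orders-service"),
    ]
    for path in ("/api/orders/42", "/api/users", "/health"):
        print(path, "->", route(path, rules, default_target="web-frontend"))
```

Because the more specific pattern has the lower priority number, it wins over the broader `/api/*` rule even though it appears later in the list.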

This approach helps eliminate the costs associated with multiple load balancers, NAT gateways, and inter-VPC network traffic by consolidating and deploying all services within a single VPC. Additionally, the customer can deploy new services within this VPC, ensuring easier management, better scalability, and more efficient use of resources.

The following diagram depicts the proposed architecture, highlighting the simplified network design and service deployment within a single VPC.

For compute, services run on ECS on EC2 instead of ECS Fargate.

The migration was completed with minimal interruption. All services and data stores, including the databases, Redis (ElastiCache), and DocumentDB, were successfully migrated into a single VPC with no data loss.

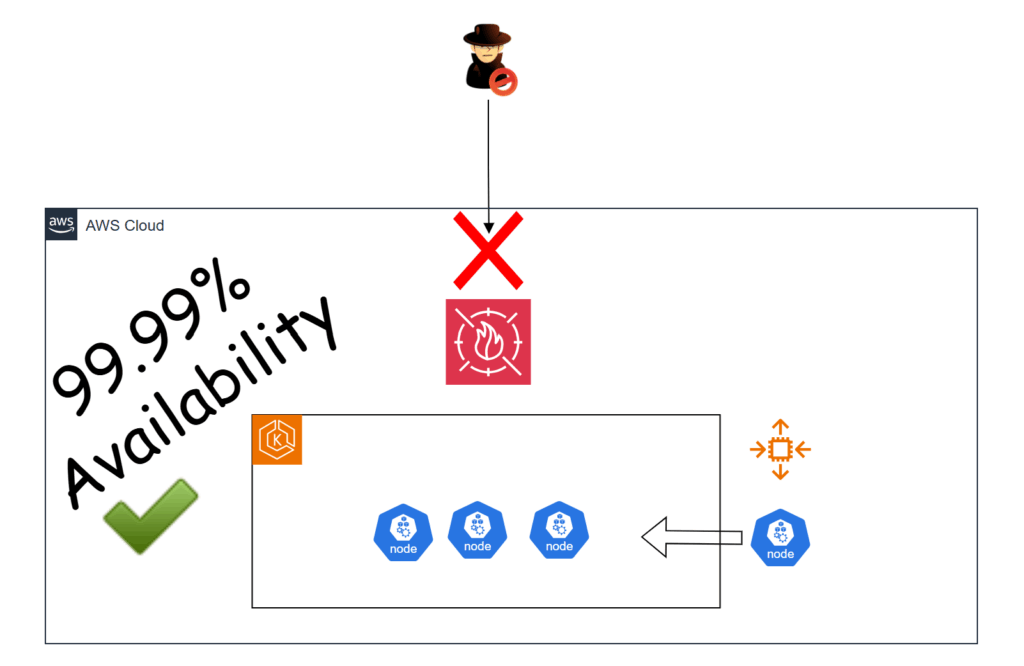

Auto scaling was implemented for both the ECS cluster and individual services, ensuring better resource utilization and improved application availability under varying workloads.
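As an illustration of how service-level auto scaling reacts to load, the sketch below applies a simple proportional rule: scale the task count by the ratio of the observed metric to its target, then clamp to the configured bounds. This is a simplified model and not the exact algorithm used by AWS Application Auto Scaling. The metric, target, and limits shown are hypothetical.

```python
import math


def desired_task_count(
    current_tasks: int,
    metric_value: float,
    target_value: float,
    min_tasks: int,
    max_tasks: int,
) -> int:
    """Proportional scaling: keep the per-task metric close to the target."""
    if current_tasks < 1:
        raise ValueError("current_tasks must be at least 1")
    if target_value <= 0:
        raise ValueError("target_value must be positive")
    if min_tasks > max_tasks:
        raise ValueError("min_tasks must not exceed max_tasks")
    raw = math.ceil(current_tasks * metric_value / target_value)
    # Clamp to the configured bounds so scaling never runs away.
    return max(min_tasks, min(max_tasks, raw))


if __name__ == "__main__":
    # Hypothetical service: 4 tasks at 90% average CPU with a 60% target.
    print(desired_task_count(4, 90.0, 60.0, min_tasks=2, max_tasks=10))
```

With ECS on EC2, the cluster itself also needs enough instances to place the extra tasks, which is why scaling was configured at both the cluster and the service level.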

Pipelines

The customer was initially using AWS CodePipeline, along with CodeBuild and CodeDeploy. After adopting GitHub Enterprise, which includes 50,000 GitHub Actions minutes per month, they wanted to eliminate the cost of CodePipeline by moving to GitHub Actions.

We migrated the CI/CD process to GitHub Actions, optimized the container image build process, and improved caching, which resulted in faster pipeline execution and lower operational costs.
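One common way to make build caching effective is to key the cache on a hash of the files that determine its contents, so the cache is reused until one of those files changes. The sketch below shows the idea in Python. It is an assumption about the general technique, not a description of the exact caching setup used in this project, and the file names are hypothetical.

```python
import hashlib


def cache_key(prefix: str, files: dict[str, bytes]) -> str:
    """Build a deterministic cache key from file names and contents."""
    digest = hashlib.sha256()
    # Sort by name so the key does not depend on dictionary ordering.
    for name in sorted(files):
        digest.update(name.encode("utf-8"))
        digest.update(b"\0")
        digest.update(files[name])
        digest.update(b"\0")
    return f"{prefix}-{digest.hexdigest()[:16]}"


if __name__ == "__main__":
    # Hypothetical inputs: a dependency lockfile and a Dockerfile.
    inputs = {
        "package-lock.json": b'{"lockfileVersion": 3}',
        "Dockerfile": b"FROM node:20\n",
    }
    print(cache_key("deps", inputs))
```

A key built this way stays stable across commits that only touch application code, so dependency layers are rebuilt only when the lockfile or Dockerfile actually changes.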

Deployment time improved significantly, dropping from approximately 1.5 hours to 17.5 minutes on average.
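The arithmetic behind these figures is straightforward to check. The short Python sketch below computes the speedup and the share of time saved from the two reported durations. The quota calculation is an additional assumption: it treats the 50,000 included minutes as a single budget, counts one job per deployment, and assumes standard Linux runners with no minute multiplier.

```python
def speedup(before_minutes: float, after_minutes: float) -> float:
    """How many times faster the new pipeline is."""
    return before_minutes / after_minutes


def time_saved_percent(before_minutes: float, after_minutes: float) -> float:
    """Share of the original duration that was eliminated."""
    return (1 - after_minutes / before_minutes) * 100


def runs_within_quota(quota_minutes: float, minutes_per_run: float) -> int:
    """Whole pipeline runs that fit into a minutes budget."""
    return int(quota_minutes // minutes_per_run)


if __name__ == "__main__":
    BEFORE = 1.5 * 60   # 1.5 hours expressed in minutes
    AFTER = 17.5
    print(f"speedup: {speedup(BEFORE, AFTER):.2f}x")
    print(f"time saved: {time_saved_percent(BEFORE, AFTER):.1f}%")
    print(f"runs within 50,000 minutes: {runs_within_quota(50_000, AFTER)}")
```

Ninety minutes divided by 17.5 minutes gives a speedup of about 5.14, which is where the rounded 5x figure comes from.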

Results and Benefits

After improving the architecture and CI/CD processes, we achieved the following results:

- 35% lower infrastructure costs

- Roughly 5x faster pipeline runs

- Auto scaling during traffic spikes

Conclusion

We successfully addressed the customer’s deployment challenges and enhanced their cloud infrastructure. First, we resolved the CI/CD pipeline failure to restore deployment capabilities. Then, we conducted a full review of the cloud architecture, focusing on performance, cost reduction, and scalability improvements.

We recommended key architectural changes, such as consolidating multiple VPCs into a single, more cost-effective VPC. Additionally, we migrated from ECS Fargate to ECS on EC2 instances to provide better control and scalability. The migration was completed with minimal disruption. As a result, the customer now benefits from improved resource utilization and enhanced application availability thanks to auto scaling.

We also optimized the container image build process and improved caching mechanisms, which significantly reduced deployment times: average pipeline execution time fell from 1.5 hours to 17.5 minutes. These changes also lowered operational costs.

Ultimately, our work helped the customer reduce infrastructure costs by 35%, streamline their deployment processes, and achieve faster, more efficient development cycles. These improvements position them for better scalability and future growth.